| 1 | package net.deterlab.abac; |

|---|

| 2 | |

|---|

| 3 | import org.apache.commons.collections15.*; |

|---|

| 4 | import org.apache.commons.collections15.functors.*; |

|---|

| 5 | |

|---|

| 6 | import edu.uci.ics.jung.graph.*; |

|---|

| 7 | import edu.uci.ics.jung.graph.event.*; |

|---|

| 8 | import edu.uci.ics.jung.graph.util.*; |

|---|

| 9 | |

|---|

| 10 | import java.awt.geom.Point2D; |

|---|

| 11 | import java.io.*; |

|---|

| 12 | import java.util.*; |

|---|

| 13 | import java.util.zip.*; |

|---|

| 14 | import java.security.*; |

|---|

| 15 | import java.security.cert.*; |

|---|

| 16 | |

|---|

| 17 | import org.bouncycastle.asn1.*; |

|---|

| 18 | import org.bouncycastle.asn1.x509.*; |

|---|

| 19 | import org.bouncycastle.x509.*; |

|---|

| 20 | import org.bouncycastle.x509.util.*; |

|---|

| 21 | import org.bouncycastle.openssl.*; |

|---|

| 22 | |

|---|

| 23 | /** |

|---|

| 24 | * Represents a global graph of credentials in the form of principals and |

|---|

| 25 | * attributes. |

|---|

| 26 | */ |

|---|

| 27 | public class Context { |

|---|

| 28 | static final int ABAC_CERT_SUCCESS = 0; |

|---|

| 29 | static final int ABAC_CERT_INVALID = -1; |

|---|

| 30 | static final int ABAC_CERT_BAD_SIG = -2; |

|---|

| 31 | static final int ABAC_CERT_MISSING_ISSUER = -3; |

|---|

| 32 | |

|---|

| 33 | protected Graph<Role,Credential> g; |

|---|

| 34 | protected Set<Credential> derived_edges; |

|---|

| 35 | protected Query pq; |

|---|

| 36 | protected boolean dirty; |

|---|

| 37 | protected Set<Identity> identities; |

|---|

| 38 | |

|---|

| 39 | protected Map<String, String> nicknames; |

|---|

| 40 | protected Map<String, String> keys; |

|---|

| 41 | |

|---|

| 42 | public Context() { |

|---|

| 43 | /* create the graph */ |

|---|

| 44 | g = Graphs.<Role,Credential>synchronizedDirectedGraph( |

|---|

| 45 | new DirectedSparseGraph<Role,Credential>()); |

|---|

| 46 | derived_edges = new HashSet<Credential>(); |

|---|

| 47 | pq = new Query(g); |

|---|

| 48 | dirty = false; |

|---|

| 49 | identities = new TreeSet<Identity>(); |

|---|

| 50 | nicknames = new TreeMap<String, String>(); |

|---|

| 51 | keys = new TreeMap<String, String>(); |

|---|

| 52 | } |

|---|

| 53 | |

|---|

| 54 | public Context(Context c) { |

|---|

| 55 | this(); |

|---|

| 56 | for (Identity i: c.identities) |

|---|

| 57 | loadIDChunk(i); |

|---|

| 58 | for (Credential cr: c.credentials()) |

|---|

| 59 | loadAttributeChunk(cr); |

|---|

| 60 | derive_implied_edges(); |

|---|

| 61 | } |

|---|

| 62 | |

|---|

| 63 | public int loadIDFile(String fn) { return loadIDFile(new File(fn)); } |

|---|

| 64 | public int loadIDFile(File fn) { |

|---|

| 65 | try { |

|---|

| 66 | addIdentity(new Identity(fn)); |

|---|

| 67 | } |

|---|

| 68 | catch (SignatureException sig) { |

|---|

| 69 | return ABAC_CERT_BAD_SIG; |

|---|

| 70 | } |

|---|

| 71 | catch (Exception e) { |

|---|

| 72 | return ABAC_CERT_INVALID; |

|---|

| 73 | } |

|---|

| 74 | return ABAC_CERT_SUCCESS; |

|---|

| 75 | } |

|---|

| 76 | |

|---|

| 77 | public int loadIDChunk(Object c) { |

|---|

| 78 | try { |

|---|

| 79 | if (c instanceof Identity) |

|---|

| 80 | addIdentity((Identity) c); |

|---|

| 81 | else if (c instanceof String) |

|---|

| 82 | addIdentity(new Identity((String) c)); |

|---|

| 83 | else if (c instanceof File) |

|---|

| 84 | addIdentity(new Identity((File) c)); |

|---|

| 85 | else if (c instanceof X509Certificate) |

|---|

| 86 | addIdentity(new Identity((X509Certificate) c)); |

|---|

| 87 | else |

|---|

| 88 | return ABAC_CERT_INVALID; |

|---|

| 89 | } |

|---|

| 90 | catch (SignatureException sig) { |

|---|

| 91 | return ABAC_CERT_BAD_SIG; |

|---|

| 92 | } |

|---|

| 93 | catch (Exception e) { |

|---|

| 94 | return ABAC_CERT_INVALID; |

|---|

| 95 | } |

|---|

| 96 | return ABAC_CERT_SUCCESS; |

|---|

| 97 | } |

|---|

| 98 | |

|---|

| 99 | public int loadAttributeFile(String fn) { |

|---|

| 100 | return loadAttributeFile(new File(fn)); |

|---|

| 101 | } |

|---|

| 102 | |

|---|

| 103 | public int loadAttributeFile(File fn) { |

|---|

| 104 | try { |

|---|

| 105 | add_credential(new Credential(fn, identities)); |

|---|

| 106 | } |

|---|

| 107 | catch (InvalidKeyException sig) { |

|---|

| 108 | return ABAC_CERT_MISSING_ISSUER; |

|---|

| 109 | } |

|---|

| 110 | catch (Exception e) { |

|---|

| 111 | return ABAC_CERT_INVALID; |

|---|

| 112 | } |

|---|

| 113 | return ABAC_CERT_SUCCESS; |

|---|

| 114 | } |

|---|

| 115 | |

|---|

| 116 | public int loadAttributeChunk(Object c) { |

|---|

| 117 | try { |

|---|

| 118 | if (c instanceof Credential) |

|---|

| 119 | add_credential((Credential) c); |

|---|

| 120 | else if (c instanceof String) |

|---|

| 121 | add_credential(new Credential((String) c, identities)); |

|---|

| 122 | else if (c instanceof File) |

|---|

| 123 | add_credential(new Credential((File) c, identities)); |

|---|

| 124 | else if ( c instanceof X509V2AttributeCertificate) |

|---|

| 125 | add_credential(new Credential((X509V2AttributeCertificate)c, |

|---|

| 126 | identities)); |

|---|

| 127 | else |

|---|

| 128 | return ABAC_CERT_INVALID; |

|---|

| 129 | } |

|---|

| 130 | catch (SignatureException sig) { |

|---|

| 131 | return ABAC_CERT_BAD_SIG; |

|---|

| 132 | } |

|---|

| 133 | catch (Exception e) { |

|---|

| 134 | return ABAC_CERT_INVALID; |

|---|

| 135 | } |

|---|

| 136 | return ABAC_CERT_SUCCESS; |

|---|

| 137 | } |

|---|

| 138 | |

|---|

| 139 | public Collection<Credential> query(String role, String principal) { |

|---|

| 140 | derive_implied_edges(); |

|---|

| 141 | |

|---|

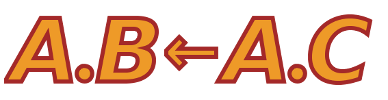

| 142 | Query q = new Query(g); |

|---|

| 143 | Graph<Role, Credential> rg = q.run(role, principal); |

|---|

| 144 | return rg.getEdges(); |

|---|

| 145 | } |

|---|

| 146 | |

|---|

| 147 | /** |

|---|

| 148 | * Returns a collection of the credentials in the graph. |

|---|

| 149 | */ |

|---|

| 150 | public Collection<Credential> credentials() { |

|---|

| 151 | Collection<Credential> creds = new HashSet<Credential>(); |

|---|

| 152 | |

|---|

| 153 | // only return creds with a cert: all others are derived edges |

|---|

| 154 | for (Credential cred : g.getEdges()) |

|---|

| 155 | if (cred.cert() != null) |

|---|

| 156 | creds.add(cred); |

|---|

| 157 | |

|---|

| 158 | return creds; |

|---|

| 159 | } |

|---|

| 160 | |

|---|

| 161 | |

|---|

| 162 | /** |

|---|

| 163 | * Returns a Query object which can be used to query the graph. This object |

|---|

| 164 | * becomes invalid if the graph is modified. |

|---|

| 165 | */ |

|---|

| 166 | public Query querier() { |

|---|

| 167 | derive_implied_edges(); |

|---|

| 168 | return new Query(g); |

|---|

| 169 | } |

|---|

| 170 | |

|---|

| 171 | /** |

|---|

| 172 | * Add a credential to the graph. |

|---|

| 173 | */ |

|---|

| 174 | public void add_credential(Credential cred) { |

|---|

| 175 | Role tail = cred.tail(); |

|---|

| 176 | Role head = cred.head(); |

|---|

| 177 | |

|---|

| 178 | /* explicitly add the vertices, otherwise get a null pointer exception */ |

|---|

| 179 | if ( !g.containsVertex(head)) |

|---|

| 180 | g.addVertex(head); |

|---|

| 181 | if ( !g.containsVertex(tail)) |

|---|

| 182 | g.addVertex(tail); |

|---|

| 183 | |

|---|

| 184 | if (!g.containsEdge(cred)) |

|---|

| 185 | g.addEdge(cred, tail, head); |

|---|

| 186 | |

|---|

| 187 | // add the prereqs of an intersection to the graph |

|---|

| 188 | if (tail.is_intersection()) |

|---|

| 189 | for (Role prereq : tail.prereqs()) |

|---|

| 190 | g.addVertex(prereq); |

|---|

| 191 | |

|---|

| 192 | dirty = true; |

|---|

| 193 | } |

|---|

| 194 | |

|---|

| 195 | /** |

|---|

| 196 | * Remove a credential from the graph. |

|---|

| 197 | */ |

|---|

| 198 | public void remove_credential(Credential cred) { |

|---|

| 199 | if (g.containsEdge(cred)) |

|---|

| 200 | g.removeEdge(cred); |

|---|

| 201 | dirty = true; |

|---|

| 202 | } |

|---|

| 203 | |

|---|

| 204 | /** |

|---|

| 205 | * Add a role w/o an edge |

|---|

| 206 | */ |

|---|

| 207 | public void add_vertex(Role v) { |

|---|

| 208 | if (!g.containsVertex(v)) { |

|---|

| 209 | g.addVertex(v); |

|---|

| 210 | dirty = true; |

|---|

| 211 | } |

|---|

| 212 | } |

|---|

| 213 | |

|---|

| 214 | public void remove_vertex(Role v) { |

|---|

| 215 | if (g.containsVertex(v)) { |

|---|

| 216 | g.removeVertex(v); |

|---|

| 217 | dirty = true; |

|---|

| 218 | } |

|---|

| 219 | } |

|---|

| 220 | public Collection<Role> roles() { |

|---|

| 221 | return g.getVertices(); |

|---|

| 222 | } |

|---|
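The mutators above (`add_credential`, `remove_credential`, `add_vertex` and `remove_vertex`) only set `dirty`; the derivation itself is deferred until `query()` or `querier()` calls `derive_implied_edges()`. The following is a minimal, library-free illustration of that lazy-recomputation pattern and is not part of the ABAC code: `LazyDeriver`, `mutate()` and `query()` are hypothetical names, and an integer stands in for the derived edges.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of the dirty-flag pattern used by Context:
// mutations only mark the cached result stale; queries rebuild it if needed.
public class LazyDeriver {
    private final List<String> facts = new ArrayList<String>();
    private boolean dirty = false;
    private int derived = 0;         // stand-in for the derived edges
    private int recomputations = 0;  // how many times derivation actually ran

    public void mutate(String fact) {
        facts.add(fact);
        dirty = true;                // like add_credential(): just mark stale
    }

    public synchronized int query() {
        if (dirty) {                 // like derive_implied_edges()
            derived = facts.size();  // placeholder for the real derivation
            recomputations++;
            dirty = false;
        }
        return derived;
    }

    public int recomputations() { return recomputations; }

    public static void main(String[] args) {
        LazyDeriver d = new LazyDeriver();
        d.mutate("A.r <- B");
        d.mutate("B.s <- C");
        System.out.println(d.query() + " " + d.query() + " " + d.recomputations());
    }
}
```

Repeated queries on an unchanged graph cost nothing extra, while a single mutation makes the next query pay for a full rederivation, much as `derive_implied_edges()` clears every derived edge and starts over.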

```java
    /**
     * Derive the implied edges in the graph, according to RT0 derivation rules.
     * They are added to this graph. See "Distributed Credential Chain Discovery
     * in Trust Management" by Ninghui Li et al. for details. Note that a
     * derived linking edge can imply a new intersection edge and vice versa.
     * Therefore we derive edges iteratively, stopping when an iteration
     * produces no new edges.
     */
    protected synchronized void derive_implied_edges() {
        // nothing to do on a clean graph
        if (!dirty)
            return;

        clear_old_edges();

        // iteratively derive links. continue as long as new links are added
        while (derive_links_iter() > 0)
            ;
        dirty = false;
    }

    /**
     * Single iteration of deriving implied edges. Returns the number of new
     * links added.
     */
    protected int derive_links_iter() {
        int count = 0;

        /* for every node in the graph.. */
        for (Role vertex : g.getVertices()) {
            if (vertex.is_intersection()) {
                // for each prereq edge:
                //     find set of principals that have the prereq
                // find the intersection of all sets (i.e., principals that satisfy all prereqs)
                // for each principal in intersection:
                //     add derived edge

                Set<Role> principals = null;

                for (Role prereq : vertex.prereqs()) {
                    Set<Role> cur_principals = pq.find_principals(prereq);

                    if (principals == null)
                        // copy, so that retainAll() below can never modify a
                        // set returned by the Query object
                        principals = new HashSet<Role>(cur_principals);
                    else
                        // no, they couldn't just call it "intersection"
                        principals.retainAll(cur_principals);

                    if (principals.size() == 0)
                        break;
                }

                // an intersection without prereqs implies nothing
                if (principals == null)
                    continue;

                // add the derived edges
                for (Role principal : principals)
                    if (add_derived_edge(vertex, principal))
                        ++count;
            }

            else if (vertex.is_linking()) {
                // make the rest of the code a bit clearer
                Role A_r1_r2 = vertex;

                Role A_r1 = new Role(A_r1_r2.A_r1());
                String r2 = A_r1_r2.r2();

                /* locate the node A.r1 */
                if (!g.containsVertex(A_r1)) continue; /* boring: nothing of the form A.r1 */

                /* for each B that satisfies A_r1 */
                for (Role principal : pq.find_principals(A_r1)) {
                    Role B_r2 = new Role(principal + "." + r2);
                    if (!g.containsVertex(B_r2)) continue;

                    if (add_derived_edge(A_r1_r2, B_r2))
                        ++count;
                }
            }
        }

        return count;
    }
```
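`derive_links_iter()` depends on `Role` and `Query`, which are defined elsewhere. The sketch below mirrors the same fixpoint on plain strings so the two RT0 rules can be run in isolation. It assumes a simplified role syntax ("A" a principal, "A.r" a role, "A.r1.r2" a linked role, "X.r & Y.s" an intersection), and its `principals()` method is a hypothetical stand-in for `Query.find_principals()`. `Rt0Sketch` and all of its methods are illustrative names, not library API.

```java
import java.util.*;

// Hypothetical, library-free sketch of the RT0 fixpoint in
// derive_implied_edges()/derive_links_iter(). An edge tail -> head means
// "head <- tail", i.e. tail is a member of head.
public class Rt0Sketch {
    // head -> its direct members (the tails of edges into head)
    final Map<String, Set<String>> members = new HashMap<String, Set<String>>();
    final Set<String> vertices = new HashSet<String>();

    void addEdge(String head, String tail) {
        vertices.add(head);
        vertices.add(tail);
        if (tail.contains(" & "))  // like add_credential(): prereqs become vertices
            vertices.addAll(Arrays.asList(tail.split(" & ")));
        if (!members.containsKey(head))
            members.put(head, new HashSet<String>());
        members.get(head).add(tail);
    }

    // Like add_derived_edge(): false if the edge already exists
    boolean addDerived(String head, String tail) {
        Set<String> m = members.get(head);
        if (m != null && m.contains(tail))
            return false;
        addEdge(head, tail);
        return true;
    }

    // Principals (names without a '.') that reach role through member edges
    Set<String> principals(String role) {
        Set<String> seen = new HashSet<String>();
        Set<String> out = new TreeSet<String>();
        Deque<String> todo = new ArrayDeque<String>();
        todo.push(role);
        while (!todo.isEmpty()) {
            String r = todo.pop();
            if (!seen.add(r)) continue;
            if (!r.contains(".")) out.add(r);
            Set<String> m = members.get(r);
            if (m != null)
                for (String t : m) todo.push(t);
        }
        return out;
    }

    // One pass over all vertices; returns the number of edges added
    int deriveIter() {
        int count = 0;
        for (String v : new ArrayList<String>(vertices)) {
            if (v.contains(" & ")) {
                // principals that satisfy every prereq of the intersection
                Set<String> common = null;
                for (String prereq : v.split(" & ")) {
                    Set<String> cur = principals(prereq);
                    if (common == null) common = new HashSet<String>(cur);
                    else common.retainAll(cur);
                }
                for (String p : common)
                    if (addDerived(v, p)) count++;
            } else {
                String[] parts = v.split("\\.");
                if (parts.length != 3) continue;  // not a linked role A.r1.r2
                String aR1 = parts[0] + "." + parts[1];
                if (!vertices.contains(aR1)) continue;
                for (String b : principals(aR1)) {
                    String bR2 = b + "." + parts[2];
                    if (vertices.contains(bR2) && addDerived(v, bR2)) count++;
                }
            }
        }
        return count;
    }

    void deriveAll() {
        while (deriveIter() > 0)
            ;  // repeat until a pass adds nothing
    }

    public static void main(String[] args) {
        Rt0Sketch g = new Rt0Sketch();
        g.addEdge("A.friend", "B");            // A.friend <- B
        g.addEdge("B.friend", "C");            // B.friend <- C
        g.addEdge("A.ff", "A.friend.friend");  // A.ff <- A.friend.friend
        g.addEdge("D.member", "C");            // D.member <- C
        g.addEdge("A.ok", "A.ff & D.member");  // A.ok <- A.ff & D.member
        g.deriveAll();
        System.out.println(g.principals("A.ok"));  // [C]
    }
}
```

The repeat loop matters here: if a pass happens to visit the intersection before the linked role, `C` is not yet a member of `A.ff`, so the intersection edge only appears on the following pass, after `A.friend.friend <- B.friend` has been derived.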

```java
    /**
     * Add a derived edge from tail to head. Returns true if the edge was
     * added, false if an edge from tail to head already exists.
     */
    protected boolean add_derived_edge(Role head, Role tail) {
        // edge exists: return false
        if (g.findEdge(tail, head) != null)
            return false;

        // add the new edge
        Credential derived_edge = new Credential(head, tail);
        derived_edges.add(derived_edge);
        g.addEdge(derived_edge, tail, head);

        return true;
    }

    /**
     * Clear the derived edges that currently exist in the graph. This is done
     * before the edges are rederived.
     */
    protected void clear_old_edges() {
        for (Credential i: derived_edges)
            g.removeEdge(i);
        derived_edges = new HashSet<Credential>();
    }

    /**
     * Put the Identity into the set of ids used to validate certificates.
     * Also put the keyID and name into the translation mappings used by Roles
     * to pretty print. In the role mapping, if multiple ids use the same
     * common name they are disambiguated by appending a number. Only one
     * entry per key ID is allowed.
     */
    protected void addIdentity(Identity id) {
        identities.add(id);
        if (id.getName() != null && id.getKeyID() != null) {
            if (!keys.containsKey(id.getKeyID())) {
                String name = id.getName();
                int n = 1;

                // name, name1, name2, ... until one is unused
                while (nicknames.containsKey(name)) {
                    name = id.getName() + n++;
                }
                nicknames.put(name, id.getKeyID());
                keys.put(id.getKeyID(), name);
            }
        }
    }
```
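`addIdentity()` keeps two inverse maps and disambiguates a repeated common name by appending a counter. The sketch below reproduces just that bookkeeping with plain strings for the name and key ID. `Nicknames` and its `add()` method are hypothetical; unlike `addIdentity()`, `add()` returns the nickname so the result can be checked.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the nickname assignment in addIdentity():
// nicknames maps nickname -> key ID, keys maps key ID -> nickname.
public class Nicknames {
    final Map<String, String> nicknames = new TreeMap<String, String>();
    final Map<String, String> keys = new TreeMap<String, String>();

    String add(String commonName, String keyID) {
        if (keys.containsKey(keyID))   // only one entry per key ID
            return keys.get(keyID);
        String name = commonName;
        int n = 1;
        while (nicknames.containsKey(name))
            name = commonName + n++;   // alice, alice1, alice2, ...
        nicknames.put(name, keyID);
        keys.put(keyID, name);
        return name;
    }

    public static void main(String[] args) {
        Nicknames m = new Nicknames();
        System.out.println(m.add("alice", "k1"));  // alice
        System.out.println(m.add("alice", "k2"));  // alice1
        System.out.println(m.add("alice", "k3"));  // alice2
        System.out.println(m.add("alice", "k1"));  // alice (key ID already known)
    }
}
```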

```java
    /**
     * Translate key IDs to nicknames or vice versa, depending on the map
     * passed in. The string is broken into space-separated tokens and each
     * token into period-separated components. Any component that is a key in
     * m is replaced with its value.
     */
    protected String replace(String is, Map<String, String> m) {
        String rv = "";
        for (String tok: is.split(" ")) {
            String term = "";
            for (String s: tok.split("\\.")) {
                String next = m.containsKey(s) ? m.get(s) : s;

                if (term.isEmpty()) term = next;
                else term += "." + next;
            }
            if (rv.isEmpty()) rv = term;
            else rv += " " + term;
        }
        return rv;
    }

    /** Replace any nicknames in s with the corresponding key IDs. */
    public String expandKeyID(String s) { return replace(s, nicknames); }
    /** Replace any key IDs in s with the corresponding nicknames. */
    public String expandNickname(String s) { return replace(s, keys); }
```
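`replace()` substitutes whole period-separated components, never substrings, so a key ID embedded inside a longer component is left alone. The sketch below restates the same loop with `StringBuilder` in a standalone class. `RoleRewriter` and the short key ID `3f2a9c` are made up for illustration; real key IDs from `extractKeyID()` are 40 hex digits.

```java
import java.util.HashMap;
import java.util.Map;

// Standalone restatement of Context.replace(): split on spaces, then on
// periods, and swap any component that is a key in the map.
public class RoleRewriter {
    static String replace(String is, Map<String, String> m) {
        StringBuilder rv = new StringBuilder();
        for (String tok : is.split(" ")) {
            StringBuilder term = new StringBuilder();
            for (String s : tok.split("\\.")) {
                if (term.length() > 0) term.append('.');
                term.append(m.containsKey(s) ? m.get(s) : s);
            }
            if (rv.length() > 0) rv.append(' ');
            rv.append(term);
        }
        return rv.toString();
    }

    public static void main(String[] args) {
        Map<String, String> keys = new HashMap<String, String>();  // key ID -> nickname
        keys.put("3f2a9c", "alice");
        System.out.println(replace("3f2a9c.admin <- bob.staff", keys));
        // prints: alice.admin <- bob.staff
    }
}
```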

```java
    /**
     * Import a zip file. First import all the identities (PEM), then the
     * credentials (DER) into the credential graph. If keys is not null, any
     * key pairs in PEM entries are added to it. If errors is not null, errors
     * reading entries are added to it, indexed by entry name.
     */
    public void readZipFile(File zf, Collection<KeyPair> keys,
            Map<String, Exception> errors) throws IOException {
        Vector<ZipEntry> derEntries = new Vector<ZipEntry>();
        // Identities waiting for a key pair and key pairs waiting for an
        // identity, both indexed by key ID
        Map<String, Identity> ids = new TreeMap<String, Identity>();
        Map<String, KeyPair> kps = new TreeMap<String, KeyPair>();

        ZipFile z = new ZipFile(zf);

        try {
            for (Enumeration<? extends ZipEntry> ze = z.entries();
                    ze.hasMoreElements();) {
                ZipEntry f = ze.nextElement();
                try {
                    PEMReader r = new PEMReader(
                            new InputStreamReader(z.getInputStream(f)));
                    Object o = readPEM(r);

                    if (o != null) {
                        if (o instanceof Identity) {
                            Identity i = (Identity) o;
                            String kid = i.getKeyID();

                            if (kps.containsKey(kid)) {
                                // key pair was read from an earlier entry
                                i.setKeyPair(kps.get(kid));
                                kps.remove(kid);
                            }
                            else if (i.getKeyPair() == null)
                                ids.put(i.getKeyID(), i);
                            else if (keys != null)
                                // key pair came in the same PEM entry
                                keys.add(i.getKeyPair());

                            loadIDChunk(i);
                        }
                        else if (o instanceof KeyPair) {
                            KeyPair kp = (KeyPair) o;
                            String kid = extractKeyID(kp.getPublic());

                            if (keys != null) keys.add(kp);

                            if (ids.containsKey(kid)) {
                                Identity i = ids.get(kid);

                                i.setKeyPair(kp);
                                ids.remove(kid);
                            }
                            else {
                                kps.put(kid, kp);
                            }
                        }
                    }
                    else {
                        // Not a PEM file
                        derEntries.add(f);
                        continue;
                    }
                }
                catch (Exception e) {
                    if (errors != null) errors.put(f.getName(), e);
                }
            }

            for (ZipEntry f : derEntries) {
                try {
                    add_credential(new Credential(z.getInputStream(f),
                                identities));
                }
                catch (Exception e) {
                    if (errors != null) errors.put(f.getName(), e);
                }
            }
        }
        finally {
            // also closes any entry streams still open
            z.close();
        }
    }

    public void readZipFile(File d) throws IOException {
        readZipFile(d, null, null);
    }
    public void readZipFile(File d,
            Map<String, Exception> errors) throws IOException {
        readZipFile(d, null, errors);
    }
    public void readZipFile(File d,
            Collection<KeyPair> keys) throws IOException {
        readZipFile(d, keys, null);
    }
```
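Because a certificate and its private key may sit in separate PEM entries that arrive in any order, `readZipFile()` parks whichever half comes first in `ids` or `kps`, keyed by key ID, until the other half shows up. The sketch below isolates that matching step, with strings standing in for `Identity` and `KeyPair`. `KeyPairMatcher` and its methods are hypothetical, and the key IDs are passed in directly rather than computed with `extractKeyID()`.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of how readZipFile()/readDirectory() pair identities
// with key pairs that may arrive in separate files, in either order.
public class KeyPairMatcher {
    final Map<String, String> waitingIds = new TreeMap<String, String>();   // key ID -> identity
    final Map<String, String> waitingKeys = new TreeMap<String, String>();  // key ID -> key pair
    final Map<String, String> paired = new TreeMap<String, String>();       // identity -> key pair

    void identity(String kid, String id) {
        if (waitingKeys.containsKey(kid))
            paired.put(id, waitingKeys.remove(kid));  // key pair was seen earlier
        else
            waitingIds.put(kid, id);
    }

    void keyPair(String kid, String kp) {
        if (waitingIds.containsKey(kid))
            paired.put(waitingIds.remove(kid), kp);   // identity was seen earlier
        else
            waitingKeys.put(kid, kp);
    }

    public static void main(String[] args) {
        KeyPairMatcher m = new KeyPairMatcher();
        m.keyPair("k1", "alice.key");    // key before certificate
        m.identity("k2", "bob.pem");     // certificate before key
        m.identity("k1", "alice.pem");
        m.keyPair("k2", "bob.key");
        m.identity("k3", "carol.pem");   // never gets a key
        System.out.println(m.paired + " unmatched ids=" + m.waitingIds.keySet());
    }
}
```

An identity left waiting at the end is simply loaded without a key pair, as in `readZipFile()`.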

```java
    /**
     * Read the objects in a PEM stream, which may hold at most one
     * certificate, optionally accompanied by a key pair. Returns an Identity
     * (with the key pair attached, if one was present), a bare KeyPair, or
     * null if the stream held no PEM objects.
     */
    protected Object readPEM(PEMReader r) throws IOException {
        Identity i = null;
        KeyPair keys = null;
        Object o = null;

        while ((o = r.readObject()) != null) {
            if (o instanceof X509Certificate) {
                if (i == null) {
                    try {
                        i = new Identity((X509Certificate) o);
                    }
                    catch (Exception e) {
                        // Translate Identity exceptions to IOException
                        throw new IOException(e);
                    }
                    if (keys != null) {
                        i.setKeyPair(keys);
                        keys = null;
                    }
                }
                else throw new IOException("Two certificates");
            }
            else if (o instanceof KeyPair) {
                if (i != null) i.setKeyPair((KeyPair) o);
                else keys = (KeyPair) o;
            }
            else {
                throw new IOException("Unexpected PEM object: " +
                        o.getClass().getName());
            }
        }

        if (i != null) return i;
        else if (keys != null) return keys;
        else return null;
    }

    /**
     * Import a directory full of files (or a single file). First import all
     * the identities (PEM), then the credentials (DER) into the credential
     * graph. If keys is not null, any key pairs in PEM files are added to it.
     * If errors is not null, errors reading files are added to it, indexed by
     * filename.
     */
    public void readDirectory(File d, Collection<KeyPair> keys,
            Map<String, Exception> errors) {
        Vector<File> derFiles = new Vector<File>();
        Collection<File> files = new Vector<File>();
        // Identities waiting for a key pair and key pairs waiting for an
        // identity, both indexed by key ID
        Map<String, Identity> ids = new TreeMap<String, Identity>();
        Map<String, KeyPair> kps = new TreeMap<String, KeyPair>();

        if (d.isDirectory())
            for (File f : d.listFiles())
                files.add(f);
        else files.add(d);

        for (File f: files) {
            try {
                PEMReader r = new PEMReader(new FileReader(f));
                Object o;

                try {
                    o = readPEM(r);
                }
                finally {
                    r.close();   // release the file handle
                }

                if (o != null) {
                    if (o instanceof Identity) {
                        Identity i = (Identity) o;
                        String kid = i.getKeyID();

                        if (kps.containsKey(kid)) {
                            // key pair was read from an earlier file
                            i.setKeyPair(kps.get(kid));
                            kps.remove(kid);
                        }
                        else if (i.getKeyPair() == null)
                            ids.put(i.getKeyID(), i);
                        else if (keys != null)
                            // key pair came in the same PEM file
                            keys.add(i.getKeyPair());

                        loadIDChunk(i);
                    }
                    else if (o instanceof KeyPair) {
                        KeyPair kp = (KeyPair) o;
                        String kid = extractKeyID(kp.getPublic());

                        if (keys != null) keys.add(kp);

                        if (ids.containsKey(kid)) {
                            Identity i = ids.get(kid);

                            i.setKeyPair(kp);
                            ids.remove(kid);
                        }
                        else {
                            kps.put(kid, kp);
                        }
                    }
                }
                else {
                    // Not a PEM file
                    derFiles.add(f);
                    continue;
                }
            }
            catch (Exception e) {
                if (errors != null) errors.put(f.getName(), e);
            }
        }

        for (File f : derFiles) {
            try {
                add_credential(new Credential(f, identities));
            }
            catch (Exception e) {
                if (errors != null) errors.put(f.getName(), e);
            }
        }
    }

    public void readDirectory(File d) {
        readDirectory(d, null, null);
    }
    public void readDirectory(File d, Map<String, Exception> errors) {
        readDirectory(d, null, errors);
    }
    public void readDirectory(File d, Collection<KeyPair> keys) {
        readDirectory(d, keys, null);
    }

    /**
     * Write the credentials to a zip file as attrN.der entries, followed by
     * identity certificates as NAME.pem entries. If allIDs is true, every
     * known identity is written; otherwise only the identities returned by
     * the credentials' getID(). If withPrivateKeys is true, each identity's
     * private key is written into its entry as well.
     */
    public void writeZipFile(File f, boolean allIDs, boolean withPrivateKeys)
            throws IOException {
        ZipOutputStream z = new ZipOutputStream(new FileOutputStream(f));
        Set<Identity> ids = allIDs ? identities : new TreeSet<Identity>();

        try {
            int n = 0;
            for (Credential c: credentials()) {
                z.putNextEntry(new ZipEntry("attr" + n++ + ".der"));
                c.write(z);
                z.closeEntry();
                if (c.getID() != null && !allIDs) ids.add(c.getID());
            }
            for (Identity i: ids) {
                z.putNextEntry(new ZipEntry(i.getName() + ".pem"));
                i.write(z);
                if (withPrivateKeys)
                    i.writePrivateKey(z);
                z.closeEntry();
            }
        }
        finally {
            z.close();
        }
    }
```
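The archive `writeZipFile()` produces is a flat list of entries: credentials first as `attr0.der`, `attr1.der`, and so on, then one `NAME.pem` per identity. The sketch below builds the same layout in memory with `java.util.zip` and lists the entry names back. `ZipLayout`, `write()` and `entryNames()` are hypothetical, and the byte payloads are placeholders rather than real DER or PEM data.

```java
import java.io.*;
import java.util.*;
import java.util.zip.*;

// Hypothetical sketch of the archive layout produced by writeZipFile():
// one "attrN.der" entry per credential, then one "<name>.pem" per identity.
public class ZipLayout {
    static byte[] write(List<byte[]> creds, Map<String, byte[]> ids) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        ZipOutputStream z = new ZipOutputStream(bytes);
        try {
            int n = 0;
            for (byte[] c : creds) {
                z.putNextEntry(new ZipEntry("attr" + n++ + ".der"));
                z.write(c);
                z.closeEntry();
            }
            for (Map.Entry<String, byte[]> e : ids.entrySet()) {
                z.putNextEntry(new ZipEntry(e.getKey() + ".pem"));
                z.write(e.getValue());
                z.closeEntry();
            }
        } finally {
            z.close();  // finishes the archive (writes the central directory)
        }
        return bytes.toByteArray();
    }

    static List<String> entryNames(byte[] zip) throws IOException {
        List<String> names = new ArrayList<String>();
        ZipInputStream in = new ZipInputStream(new ByteArrayInputStream(zip));
        try {
            for (ZipEntry e = in.getNextEntry(); e != null; e = in.getNextEntry())
                names.add(e.getName());
        } finally {
            in.close();
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        List<byte[]> creds = Arrays.asList(new byte[] {1}, new byte[] {2});
        Map<String, byte[]> ids = new TreeMap<String, byte[]>();
        ids.put("alice", new byte[] {3});
        System.out.println(entryNames(write(creds, ids)));
        // [attr0.der, attr1.der, alice.pem]
    }
}
```

One consequence worth noting: `ZipOutputStream.putNextEntry` throws a `ZipException` for a duplicate entry name, so two identities with the same `getName()` would make `writeZipFile()` fail partway through.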

```java
    /**
     * Return the SHA-1 key identifier of the key as a lowercase hex string.
     */
    public static String extractKeyID(PublicKey k) {
        SubjectPublicKeyInfo ki = extractSubjectPublicKeyInfo(k);
        SubjectKeyIdentifier id =
            SubjectKeyIdentifier.createSHA1KeyIdentifier(ki);

        // Now format it into a string for keeps: two hex digits per byte
        Formatter fmt = new Formatter(new StringWriter());
        for (byte b: id.getKeyIdentifier())
            fmt.format("%02x", b);
        return fmt.out().toString();
    }
```
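`extractKeyID()` turns the 20-byte SHA-1 identifier into a string by formatting each byte with `%02x`. For a `Byte` argument, `java.util.Formatter` prints a negative value as its unsigned two-digit form, so no `& 0xff` masking is needed. The sketch below applies the same formatting to a plain `MessageDigest` SHA-1 over arbitrary bytes; it does not reproduce which key bytes BouncyCastle hashes, and `KeyIdHex` is a hypothetical name.

```java
import java.nio.charset.Charset;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Formatter;

// Sketch of the hex formatting used by extractKeyID(), applied to a SHA-1
// digest of arbitrary bytes rather than to a key's SubjectPublicKeyInfo.
public class KeyIdHex {
    static String sha1Hex(byte[] data) throws NoSuchAlgorithmException {
        byte[] digest = MessageDigest.getInstance("SHA-1").digest(data);
        Formatter fmt = new Formatter(new StringBuilder());
        for (byte b : digest)
            fmt.format("%02x", b);  // negative bytes print as two unsigned hex digits
        String hex = fmt.toString();
        fmt.close();
        return hex;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sha1Hex("abc".getBytes(Charset.forName("US-ASCII"))));
        // a9993e364706816aba3e25717850c26c9cd0d89d
    }
}
```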

```java
    /**
     * Extract the SubjectPublicKeyInfo of a key. Also used elsewhere, notably
     * by Certificate.make_cert(). Returns null if the key's encoding cannot
     * be parsed.
     */
    public static SubjectPublicKeyInfo extractSubjectPublicKeyInfo(
            PublicKey k) {
        ASN1Sequence seq = null;
        try {
            seq = (ASN1Sequence) new ASN1InputStream(
                    k.getEncoded()).readObject();
        }
        catch (IOException ie) {
            // Badly formatted key??
            return null;
        }
        return new SubjectPublicKeyInfo(seq);
    }

}
```